Blog Posts as part of the Open University Course, H817, that I undertook in 2016.

Last Impressions of H817

OK so I submitted my final end of module assessment - fingers crossed. There’s no need for me to post this blog but I guess it’s a virtual way of me saying ‘Cheerio’ to the H817 experience (assuming I pass).

Where are my peers?

What I really enjoyed about the previous MAODE courses that I’ve done has been the constant interaction that I was able to get from people on the course, particularly those assigned to my tutorial group. Sadly, for one reason or another, that did not happen on this course. I did, however, have a lot of interaction with one of my peers, as we were the only ones remaining for the Module 3 learning artefact that we had to produce (thanks Steve!); so I guess what I missed in one sense, I made up for with a lot more interaction with one individual.

Somehow along the way the OU changed my main correspondence address from the one that I’ve been using with them since 2006 to one that they assigned to me with an @student.open.ac.uk account (never used it, never even told that it existed), so I suspect that explains why I had less frequent contact with my tutor on this course than on the others. Having said that, it’s pretty awesome that my tutor was able to offer an alternative personal email address and give me a mobile number that I could send text messages to (thanks Victoria).

Practice what one preaches

I still find the overall academic insight about open and distance education in the Open University to be very rich and deep, but it still drives me bananas that they cannot seem to put into practice what they are actually preaching. I mean, for goodness’ sake, they are a (justifiably) premier open and distance learning institution, specifically promoting research and best practice in open and distance education, but they still seem to be stuck in a practice that harks back to the early 2000s. For instance:

- Tutor group discussions are totally closed with no real ability to cut in outside code or sources to enhance or illustrate the discussion. This penalises particularly those of us that prefer a PLN/PLE and have set up our own blog sites.

- We learn about learning analytics and we are told that OU is using them, but we're not able to see the learning analytics generated by ourselves as individual students.

- There appears to be little incentive for, or promotion of, having students comment on each other's discussion posts or engage in a suitable discussion, even though the research we're pointed to demonstrates convincingly that learning is significantly enhanced through interaction and discussion!

Overall Positive or Negative Impression?

Much of the course had me grinding my teeth, partly because I felt that the work and assignments were too formulaic and thus too restrictive. Many of the ‘innovations’ felt as if the course designers were reaching a bit too far, and really there is nothing there at the moment.

The Learning Design section I felt was a good example of doing something more or less for the sake of doing it. I understand the concept but in reality it’s only half baked and cannot be fruitfully applied to help programme designers or evaluators figure out whether real learning is taking place or has taken place. I think the ‘modular’ approach to education has merits, but in the end it does not work as well as a thoughtfully integrated curriculum; learning design is the right idea but, particularly in ‘soft’ subjects (i.e. not maths or physics), tends to be hard to apply.

Learning analytics seems to be an ‘iffy’ concept that in the end works if we know (i) what real learning actually is and (ii) we can understand the science of real learning completely – neither of which we can. To say nothing of the idea that learning analytics is currently only working in a very tight virtual ecosystem, namely that of the learning management system (LMS) and I feel personally that much/most learning occurs outside of the LMS.

BUT …

… I have been thinking about this for a few weeks now and I think that I’ve been learning a lot from this course. Part of it is what ‘not to do’ but part of it has been because I’ve been forced to think about why I have objections to what has been proposed, suggested, enacted. I am forced to have an opinion, I am forced to consider alternatives - and I guess that’s part of the true learning.

However, I want to end with the things that I did learn, that surprised me and did delight. In particular the work on both OERs and MOOCs has made a big impression. The project that I did with the remaining tutee person (thanks again Steve) is something that I did learn from. And so of course the project was an overall positive experience for me. Thanks (as in the previous two courses) to my tutor for the help and assistance - very much appreciated.

Vision for Learning Analytics - Block 4, Activity 22

Previously I wrote a blog about a paper by Dyckhoff et al (2013) which spoke about learning analytics and action research. We’ve been asked to look again at the goals of learning analytics as they relate to either educators or students but, from the list, to focus on what learning analytics could be. I suspect this is also supposed to refer back to the blog entry on my own definition of learning analytics, although the constraint is to do it in two sentences.

The purpose of learning analytics is to utilise student-generated data to help individual students maximise their learning potential by alerting them to potential strategies or to ‘pitfalls’ to avoid in their current learning approach(es). Although the data gathered from learning analytics is highly secure and access is granular, it remains up to the individual student to opt in or opt out at any time.

I think I cheated by using extra long sentences!

I think this is really a manager’s vision that is biased towards the privacy and data security wellbeing of the learner.

Reference:

Dyckhoff, A.L. et al. (2013) Supporting Action Research with Learning Analytics. Proceedings of the Third International Conference on Learning Analytics and Knowledge (LAK13), New York, ACM, 220-229.

Evaluating Analytics Policy - Block 4, Activity 21

I think reading the OU’s policies and making sense of them would probably take 20-30 minutes. However, I have to say that policies are not something one wants to read when it’s not totally clear why they should be read or what the potential implications are or could be. I think students (including myself) would tend to just ‘click’ through.

The paper by Slade and Prinsloo (2014), suggests that this would tend to be the case and that most of the comments seem to be of the ‘huh’ variety. The rest was relatively what one would expect BUT there was concern for the purpose to which the data might be used. They highlighted the following student’s concern:

“There’s a huge difference IMO between anonymised data to observe/monitor large scale trends and the “snooping” variety of data collection tracking the individual. I’m happy for any of my data to be used in the former; with the latter I would be uncomfortable about the prospect that it would be used to label and categorise students in an unhelpful or intrusive way”.

The question is: how could one inform students that (a) data can/might be collected; (b) it is supposed to help their learning; and (c) they shouldn’t be worried about privacy or security issues because the institution has taken very high precautions to protect them?

The issue that I see with most ‘please read these policies and then accept them’ requests is that the policies, for legal reasons I guess, have to be so long. I mean, when I read the terms and conditions for a standard computer operating system, they are more than 30 pages long.

I mean seriously!

My potential solution would be to somehow just hit students with ‘Are you concerned with your privacy when you study with us?’ huge banner that students have to encounter before accepting. Then perhaps only give them the basic issues that they need to be concerned about AND give them the option to ‘take a chance’ or ‘check out the small print’, in other words let them move through about 4 screens of basic facts (it’s there to help; it’s secure; it’s not used for any other purpose) and then give a link to read the fine detail, or trust the institution and accept then and there.

OU Ethics of Learning Analytics - Block 4, Activity 20

The Open University’s policy on ethics can be summarised thus:

- Useful for identifying students not completing course requirements.

- Identifying students who might be ‘at risk’ based on online behaviour.

- Evaluation of the teaching/learning design.

- Issues about data protection, in line with UK data protection regulations.

Frankly, given this modern day and age of concern about privacy, the regulations seem weak and open to abuse. The OU appears to have a ‘cop out’ policy, hiding behind national (UK) regulations. There is, however, a document that outlines ‘principles’ far better (i.e. gives more comfort to an OU student), such as:

- OU needs to be responsible in its use of learning analytics to enhance student success.

- The OU would actively guard against simply using learning analytics to stereotype behaviour and/or passing judgments on students based on previous data analysis.

- OU needs to be transparent on the data it's collecting from the student, and students need to be engaged active agents in the implementation of learning analytics.

- Learning analytics requires sound analysis of data and an acceptance of the values of learning analytics.

I think the last point is a bit dodgy; it seems to say ‘you have to accept learning analytics as a principle if you want to study at OU.’

Implement Ethics Policy in an Institution

With respect to designing and implementing a policy at an organisation (other than that it’s probably a good idea to get learning analytics data, even if it’s not clear why/how it would be useful), I think the following should be considered.

- Learning Analytics should not be mandatory but an opt in/opt out policy. Perhaps the policy acceptance should be that students are by default 'opted in' but they have to accept terms and conditions SPECIFICALLY for the use of their data, which explain that their data is set up by default to 'opt in' and that they can 'opt out' at any time.

- An explanation of how the data is stored to assure students that their data cannot be hacked or identified even if the data is hacked.

- An explanation of which kind of institutional or organisational personnel can access the student's data.

- Easy and free access to the student's own analytical data and, IF there is a learning intervention from the institution's staff based on the learning analytics, that the intervention is prefaced with the learning analytics data that the educator used to take the decision to intervene.
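To check for myself that these points hang together, here is a minimal Python sketch of how they might be modelled. Everything in it is my own assumption for illustration: the class name, the role names and the fields are hypothetical and are not taken from the OU's policy or from any real LMS. It also leaves out the storage and encryption point entirely.

```python
from dataclasses import dataclass, field

# Hypothetical staff roles allowed to read a student's analytics
# (an assumption for illustration, not actual OU policy).
ALLOWED_ROLES = {"tutor", "student_support"}


@dataclass
class StudentAnalytics:
    student_id: str
    accepted_terms: bool = False  # student must explicitly accept the data terms
    opted_in: bool = True         # 'opted in' by default, as suggested above
    records: list = field(default_factory=list)

    def opt_out(self):
        """Students can opt out at any time."""
        self.opted_in = False

    def can_collect(self):
        """Data may only be used if the terms were accepted and the student is opted in."""
        return self.accepted_terms and self.opted_in

    def view(self, requester_id, role=None):
        """Students always see their own data; staff only by role and only while opted in."""
        if requester_id == self.student_id:
            return list(self.records)
        if role in ALLOWED_ROLES and self.can_collect():
            return list(self.records)
        raise PermissionError("no access to this student's analytics")


def intervene(analytics, staff_id, role, message):
    """Any intervention is prefaced with the data the educator based it on."""
    evidence = analytics.view(staff_id, role)
    return {"evidence": evidence, "message": message}
```

In this sketch a tutor's message to a student always arrives together with the records that prompted it, and once the student opts out the tutor can no longer read the data at all, while the student still can.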

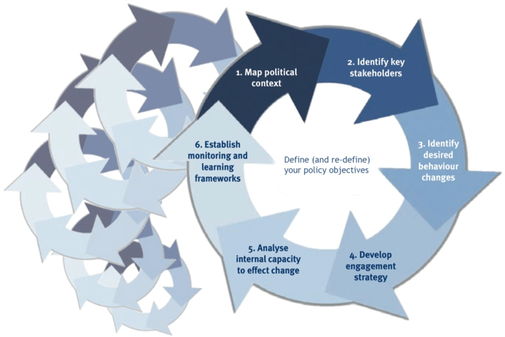

ROMA Reflections - Block 4, Activity 19

This is about figuring out how to map a process of implementing an analytics programme within an institution.

Called the ROMA model (Rapid Outcome Mapping Approach) with respect to Learning analytics, there are seven steps identified:

- Define a clear set of overarching policy objectives

- Map the context

- Identify the key stakeholders

- Identify learning analytics purposes

- Develop a strategy

- Analyze capacity; develop human resources

- Develop a monitoring and learning system (evaluation)

In the previous post Numbers are not enough to change, I argued that learning analytics is a ‘hard sell’ mainly because I have not yet found any convincing evidence that it can be used in a way to support and enhance learning beyond what a conscientious tutor might already do with a simple tick box spreadsheet, if the learning design is relatively serially programmed (step A comes before step B etc). So with respect to points 1-3, it’s possible to answer these questions on faith that somehow learning analytics will provide overall good; but on points 4-7 I am not sure how that can be legitimately applied given that there’s no strong empirical evidence to suggest that it works.

In the post Beyond Prototypes, the issue remains the same that strong empirical evidence for the benefits for student learning as a result of learning analytics, seems scant, or absent. However, the ROMA model (as in any iterative plan-implement-lessons learned cycle), benefits from learning from any starting point and hence could be the very thing that allows the implementation to address the concerns felt by the identified communities within the institution (student, educators, technical and the overall ecology of practices).

Reference:

Ferguson, R. et al. (2014) Setting Learning Analytics in Context: Overcoming the Barriers to Large-Scale Adoption. Journal of Learning Analytics, 1, 120-144.

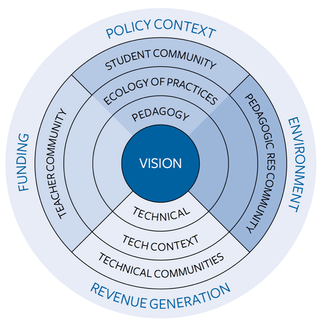

Beyond Prototypes - Block 4, Activity 18

So we’ve been asked to imagine that the management team’s vision of an educational institution is that, in the future, they will be able to claim on their website that ‘learning and teaching at this institution are supported by learning analytics’. However, since the management team is not sure what would be involved in implementing a change to support this statement, they cannot clearly state what changes and developments might be required in order to support this claim.

Student Community

The student body, including undergraduate and postgraduate bodies, would need to feel secure that the analytics were solely being used to support their learning and that they were not being prejudiced against because of the analytics. Privacy issues are a major concern.

Teacher Community & Pedagogic Resource Community

Educators and others involved in pedagogic practice or design, would need to be able to know what analytics are, how they are aligned with learning design, how they can be implemented and privacy concerns etc addressed, how they can be monitored and assessed, and finally how they can be used effectively to enhance teaching/learning. This group is perhaps the one that would be the hardest to convince and/or train up – essentially they need to be convinced that the learning curve and effort will more than pay off what they are currently doing.

Technical Communities & Technical content

This community would work with the teaching and pedagogic communities on practices that can gather the data, protect privacy and yet be easily understood by educators and students alike. The hardest part is perhaps to gather the data in a way that is encrypted, but is still of utility to the individual student.

Ecology of Practices

Essentially changing the mindset of the whole institution, including the Vice Chancellor or CEO of an institution, so that learning analytics are seen as an integral part of the learning ecology, not just an interesting ‘technical experiment’. Some sort of overview body might be required to ensure that the analytics are fulfilling their function, and they may need to be re-evaluated in light of new technical developments in the technology enhanced learning (TEL) arena. This is probably the ultimate conceptual space in which learning analytics will either fail or succeed.

I think the wording is adequate.

Reference to the Headings above from the report:

Scanlon, E. et al. (2013) Beyond Prototypes: Enabling Innovation in Technology-enhanced Learning. (Online). Available at http://beyondprototypes.com/ (Accessed 3rd August 2016).

Numbers not enough to change - Block 4, Activity 17

Macfadyen and Dawson (2012), argue in their paper Numbers are not Enough, that despite learning analytics being presented to peer staff members, they were not convinced enough to take up the offerings of their institution’s ‘Learning Management System’ and then alter or adapt their teaching practices according to the information the analytics could provide. They offer a number of reasons why this probably did not occur including:

- perceived gain, or lack of gain in adopting new process

- lack of time (linked to above)

- decision makers being 'out of the loop' in getting the most out of technologies

- loss of control at a departmental level to a central authority (the LMS)

What I cannot fathom though is that this paper makes no real reference to the efficacy of the LMS in helping staff members to teach, or even better, their students to learn better. It’s all taken as a matter of fact that this will enhance their learning.

I remember this happening at a university that I used to work at where a commercial LMS was being implemented. What the implementing team seemed to forget was that there is a learning curve to using the technology, and there was no demonstrable gain.

While I think the paper that is cited (Rogers, 1995), which deals with resistance to innovation and change, is broadly speaking correct, it is also true to say that many innovations were broadly proclaimed as ‘the next big thing’ and then nothing really came of them. In the same university that I mentioned above, there was a massive push to make all the lectures available online, or on demand, by basically videoing lectures. What the implementers forgot was that there’s nothing quite like looking at a talking head for 60 minutes to send you into the land of nod (zzzzzzzzzzzzzzzzz).

References:

Macfadyen, L.P. & Dawson, S. (2012) Numbers Are Not Enough. Why e-Learning Analytics Failed to Inform an Institutional Strategic Plan. Educational Technology & Society, 15, 149-163.

Rogers, E. M. (1995). Diffusion of Innovations (4th ed.). New York: Free Press.

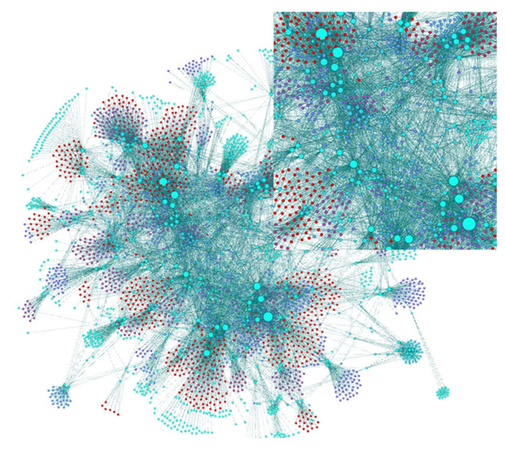

Citation Networks - Block 4, Activity 15

This exercise asked us to read a paper by Dawson et al (2014), the gist of which was an analysis of the kind of research being done on learning analytics and the kind of researchers who were interested in and actively engaged in studying it.

The practical implications of their research were that learning analytics was increasingly a topic in learning disciplines; that more effort needs to be put into empirical studies; and that cross collaboration with other data collecting resources would be fruitful.

Despite what look like impressive analytics of the kind of figures and pictures that suggest analytic data, I do NOT see this as an example of learning analytics. I see it as research analytics: the research topic happens to be about learning analytics.

It is using the methodology of citation indices to shed light on the kind of information that is being promoted or thought of as important at a particular point or slice in time of a research arena (think ‘Science Citation Index’, or ‘Social Science Citation Index’).

The disparity of the citation indices of research in either the specialised proceedings of the International Conference on Learning Analytics and Knowledge (between 10-16) vs. those in broader fields (Google Scholar citation) shows that the field is very new since those in the proceedings tend to be cited almost the same number of times, vs. large differences in the broader fields (from 25 citations through to more than 16,600).

The authors are keen to point out that learning analytics only provides part of a solution:

Learning analytics to date has served to identify a condition, but has not advanced to deal with the learning challenges in a more nuanced and integrated manner.

We were asked to read this paper in different sections and out of order from the main paper. Did it make a difference to how I interpreted the paper by missing out some sections? I don’t think so, but then this is how I read many papers anyhow for my other research arenas. In this sense the programme helped to identify which sections to focus on, whereas by oneself one needs to consider how to identify the relevant sections oneself.

Or what am I missing?

References:

Dawson, S. et al. (2014) Current State and Future Trends: A Citation Network Analysis of the Learning Analytics Field. Proceedings of the Fourth International Conference on Learning Analytics and Knowledge, New York, ACM Press, 231-240.

Why Soc. Learning Analytics - Block 4, Activity 13

We were asked to produce a slideshow on the definition, utility and usage of social learning analytics. I had heard about and read reviews of Prezi.com, an online slideshow-making piece of software that gives you an enormous palette onto which you can place any number of elements. A flow allows one to ‘zoom’ to any of the elements on this virtually unlimited canvas. The result can be a bit dizzying for the audience, as the transition from one element to another tends to be rather abrupt and, on a projector, can perhaps give a sense of nausea.

So I decided to try my hand at creating a slideshow using Prezi and here it is:

Advice on Learning Analytics Use H817 - Block 4, Activity 12

If not already being used, then the advice would be to use learning analytics in the Open Education block of the H817 course. It appears in this Block 2 of the H817 programme (similarly for Block 1 & 4 - this current Block) that the main educational pedagogy of the course is to access material and then to comment on the material and hopefully engage in a discussion about those comments with other members of the tutorial group.

Checkpoint Analytics: can be used to simply see if students have accessed the suggested resources that are required in order to make the commentary.

Process Analytics: to be used to figure out who is posting a comment and perhaps as importantly, who is commenting on those comments.
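
To make the distinction concrete for myself, here is a minimal Python sketch of the two kinds of analytics. It assumes a hypothetical activity log of (student, event type, target) records; the event names, the student names and the sample data are all invented for illustration and are not drawn from the OU's Moodle or any real LMS.

```python
from collections import Counter

# Hypothetical activity log: (student, event_type, target). Invented sample data.
LOG = [
    ("ann", "view", "reading_1"),
    ("ann", "view", "reading_2"),
    ("ann", "post", "thread_1"),
    ("bob", "view", "reading_1"),
    ("bob", "reply", "ann"),
    ("cat", "reply", "ann"),
]

# Resources a student is expected to open before commenting (assumed names).
REQUIRED = {"reading_1", "reading_2"}


def checkpoint_analytics(log, required):
    """Checkpoint analytics: which required resources has each student not yet opened?"""
    students = {student for student, _, _ in log}
    viewed = {student: set() for student in students}
    for student, event, target in log:
        if event == "view":
            viewed[student].add(target)
    return {s: sorted(required - viewed[s]) for s in sorted(students)}


def process_analytics(log):
    """Process analytics: who is posting, and who is replying to whom?"""
    posts = Counter(s for s, event, _ in log if event == "post")
    replies = Counter((s, target) for s, event, target in log if event == "reply")
    return posts, replies
```

Note that both functions only count clicks and posts; neither says anything about what a tutor should do with the numbers, or whether any learning is actually occurring.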

However, what is not clear (to me) is what the consequences of these learning analytics should/could be. In my own case, for example, I’ve normally been behind (according to the time line) but have not been contacted to say ‘is everything ok?’ or ‘are you joining us?’. Of course that would have been easily answered (‘yes’ & ‘yes’), and just because there is no interaction on the discussion boards does not mean that learning is not occurring.

Learning Analytics and the LMS of a Learning Institution

This seems to bring up a fundamental issue with learning analytics, which is that they currently seem to require students to be using the LMS of the learning institution (OU’s Moodle in H817). I personally find the Moodle environment to be a design nightmare and try to spend as much time out of it as possible. Most web designers have figured out that the more a user has to ‘click’ to access information, or contribute to information, the less likely they are to do so. There are a number of members of the tutorial group (myself included) that host their own blogs but it’s nigh impossible to ‘cut’ that blog into the formal H817 tutorial discussion boards (or at least it’s not easy and I have not found a way yet). Thus it takes extra effort to comment on the blogs of others AND of course for others to comment on mine. With 5 weeks to go before the end of the course, I haven’t had a single comment on this blog, even though there are (including this one) 32 postings and I’ve cut the link to all, or almost all, of them into the discussion board.

This sounds like a complaint, or a whinge. Of course I might not have that much to comment on (heh heh) but that is not my point.

My point is that if learning analytics requires measurement of interaction and access to each other, then for the moment it seems as if it can only work by buying into the LMS of the institution.